MCP is NOT a data technology. It’s the language of interaction between AI and data, structured or unstructured.

1. Introduction to MCP: The Beginning of a Paradigm Shift

Over the past two decades, the enterprise data ecosystem has evolved from manual data entry systems to cloud warehouses and sophisticated BI dashboards. Yet the process remained largely static: data had to be collected, structured, cleaned, and visualized — often through layers of human-driven tools.

Now, that model is breaking down.

Enter the Model Context Protocol (MCP), a transformative standard that redefines how AI systems communicate with data. Unlike technologies that store or transform data, MCP enables understanding.

It’s not another analytics engine — it’s the universal language of interaction between intelligence and information.

For companies that built their business on data integration, warehousing, or visualization, this is not just another innovation cycle. It’s a paradigm shift.

The last major leap in enterprise data occurred when companies moved from on-premises databases to cloud platforms, a transition that enabled global scalability but didn’t change how we think about data. The Model Context Protocol marks the first real evolution in the relationship between data and intelligence. Instead of humans building bridges between systems, MCP allows machines to understand context and interact with information autonomously. This reduces friction across every stage of data usage, from ingestion to decision-making, and opens what some technologists call the “Cognitive Infrastructure Era.” In this new paradigm, insight becomes an on-demand service rather than an output of complex engineering.

2. Understanding the Model Context Protocol (MCP)

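At its core, MCP is an open, standardized communication protocol, introduced by Anthropic in November 2024, that allows AI applications to connect dynamically to external data sources, APIs, and tools in a consistent way. It follows a client-server model: an MCP client inside the AI application exchanges JSON-RPC 2.0 messages with MCP servers, and each server exposes capabilities such as tools (functions the model can call), resources (data it can read), and prompts (reusable templates). In simple terms, MCP lets an AI “talk” to your systems: fetch live data, interpret it, and return insights in real time. By enabling AI to query, interpret, and visualize data conversationally, it reduces the dependency on pre-built data pipelines and dashboards.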
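To make the “talk to your systems” idea concrete, here is a minimal Python sketch of what travels over the wire. MCP messages are JSON-RPC 2.0 objects; `tools/list` and `tools/call` are method names from the MCP specification, while the tool name `query_sales` and its `region` argument are hypothetical. A real client would normally use an official MCP SDK rather than hand-built dictionaries, and would first complete the protocol’s `initialize` handshake, which this sketch omits.

```python
import itertools
import json
from typing import Optional

# Increasing request ids: JSON-RPC 2.0 requests carry an id so that each
# response can be matched to the request that produced it.
_ids = itertools.count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object for an MCP server."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Step 1: discover which tools the server exposes.
list_tools = make_request("tools/list")

# Step 2: invoke a (hypothetical) tool with structured arguments.
call_tool = make_request(
    "tools/call",
    {"name": "query_sales", "arguments": {"region": "APAC"}},
)

for message in (list_tools, call_tool):
    # With the stdio transport, each message is written as one line of JSON.
    print(json.dumps(message))
```

The client never needs source-specific code here: whatever system sits behind the server, discovery and invocation use the same two message shapes.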

Think of MCP as the “USB port of the AI world.” Just as USB standardized how devices connect to computers, MCP standardizes how intelligent systems connect to data ecosystems.

The power of MCP lies not just in connection, but in standardization. For decades, every organization built its own APIs, connectors, and data contracts, leading to enormous duplication of effort and inconsistent security. MCP replaces this with a single protocol between AI applications and data sources. Connecting M AI applications to N data sources with bespoke connectors can take up to M × N integrations; with a shared protocol, each application implements an MCP client once and each source is wrapped as an MCP server once, so the work grows as M + N. Any MCP-compatible application, whether it runs an open-source or an enterprise-grade model, can therefore discover and use the same data sources in a uniform way. The implications are immense: interoperability, faster deployment, reduced vendor lock-in, and scalable automation. In the same way the Internet Protocol (IP) made global networking possible, MCP is positioned to make universal AI-data dialogue the norm.
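Standardization is easiest to see from the server side. In MCP, a server advertises each tool with a name, a description, and a JSON Schema for its inputs, so any compatible client can discover and call it without custom integration code. The Python sketch below hand-rolls a tiny dispatcher for `tools/list` and `tools/call`; the `count_tickets` tool and its in-memory data are hypothetical, and a production server would use an MCP SDK, handle the full protocol lifecycle, and validate arguments against `inputSchema`.

```python
import json
from typing import Any, Callable

# Hypothetical data source: a tiny in-memory ticket store.
TICKETS = {"open": 12, "closed": 30}


def count_tickets(status: str) -> str:
    """Hypothetical tool body: count tickets with a given status."""
    return f"{TICKETS.get(status, 0)} tickets with status '{status}'"


# Each tool is advertised with a name, a description, and a JSON Schema for
# its inputs; this descriptor is what any compatible client reads.
TOOLS: dict[str, tuple[dict, Callable[..., str]]] = {
    "count_tickets": (
        {
            "name": "count_tickets",
            "description": "Count support tickets by status.",
            "inputSchema": {
                "type": "object",
                "properties": {"status": {"type": "string", "enum": ["open", "closed"]}},
                "required": ["status"],
            },
        },
        count_tickets,
    ),
}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Answer tools/list and tools/call requests with JSON-RPC 2.0 responses."""
    req_id, method = request.get("id"), request.get("method")
    if method == "tools/list":
        result = {"tools": [spec for spec, _ in TOOLS.values()]}
    elif method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            # -32602 is the JSON-RPC 2.0 "Invalid params" error code.
            return {"jsonrpc": "2.0", "id": req_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        _, fn = entry
        text = fn(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        return {"jsonrpc": "2.0", "id": req_id,
                "error": {"code": -32601, "message": f"Unknown method: {method}"}}
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


reply = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                "params": {"name": "count_tickets", "arguments": {"status": "open"}}})
print(json.dumps(reply))
```

Because the descriptor travels with the server, adding a second data source means writing a second server, not touching every client.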

3. The Traditional Data Stack: How We Got Here

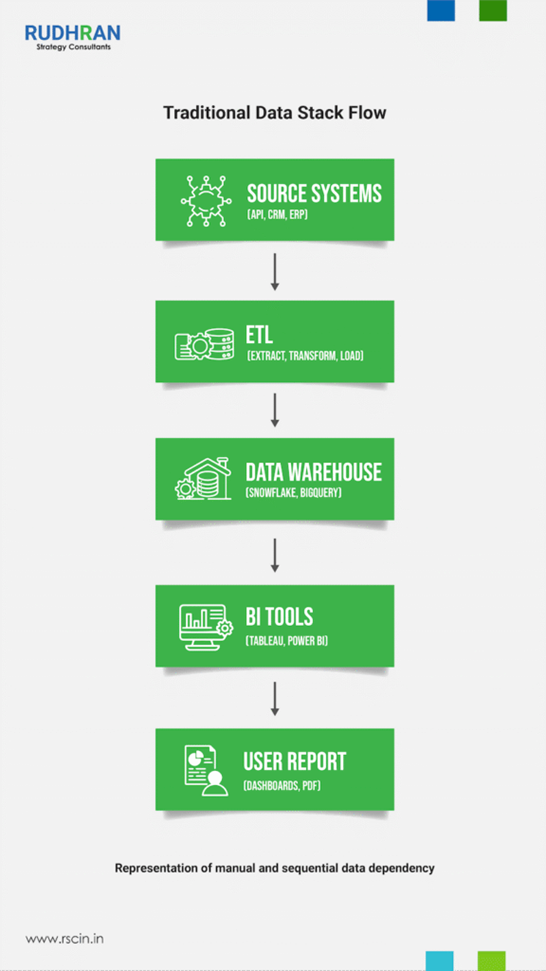

For over a decade, the traditional data infrastructure has followed a predictable model, as shown in the figure above.

Traditional Workflow: Source Systems → ETL → Data Warehouse → BI Tool → User Report

Each layer introduced complexity — requiring specialized tools and skilled engineers to manage the flow. This led to the rise of platforms like:

- Snowflake (Data warehousing)

- Fivetran / Airbyte (Data ingestion)

- dbt (Data transformation)

- Tableau / Power BI (Visualization)

- Consultancies like Kipi.bi (Integration and analytics setup)

These systems worked well — but they required time, maintenance, and human intervention. Every new insight meant another cycle of extraction, transformation, and dashboard modification.

This model evolved because enterprises once needed control and predictability — data lived in silos, infrastructure was fragile, and governance was manual. But every additional layer added latency, cost, and dependency. ETL pipelines could take hours; schema changes required entire sprint cycles; and dashboards often reflected what happened last month, not today. The resulting data bureaucracy slowed decision-making and inflated operational budgets. Most importantly, it separated the people who know the data from the people who need the insight. MCP collapses that gap entirely.

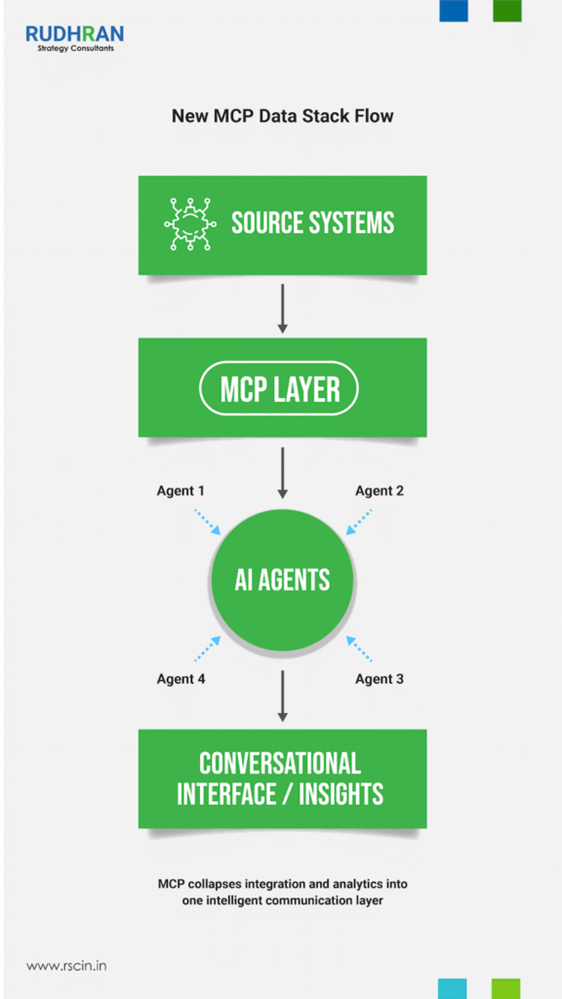

4. The MCP Revolution: Collapsing the Data Stack

MCP changes the fundamental premise: Instead of moving data to where analysis happens, MCP moves intelligence to where the data lives.

New Workflow: Source Systems → MCP → AI Agents → Conversational Interface

With MCP, there’s no need to build a new pipeline for every question. An MCP server describes its tools once (each with a JSON Schema for its inputs), and AI models can:

- Connect directly to APIs or databases.

- Query live data securely.

- Generate contextual insights instantly.

This effectively collapses the middle layers, folding ETL, warehousing, and BI visualization into a single, real-time reasoning layer.
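The “query live data securely” step can be sketched in a few lines of Python. The example below uses an in-memory SQLite database as a stand-in for a source system; the `orders` table and the `query_sales` function are hypothetical, and in a real deployment a function like this would be registered as a tool on an MCP server. What it illustrates is that the query runs against the source at the moment it is asked, with the user-supplied value bound as a parameter rather than pasted into SQL.

```python
import sqlite3


def open_source_db() -> sqlite3.Connection:
    """Stand-in for a live operational database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("APAC", 120.0), ("APAC", 80.0), ("EMEA", 200.0)],
    )
    return conn


def query_sales(conn: sqlite3.Connection, region: str) -> dict:
    """Tool body an MCP server might expose: query the source at call time.

    Binding `region` as a parameter (never formatting it into the SQL string)
    is one basic safeguard; a real server would also enforce authentication
    and read-only access.
    """
    orders, revenue = conn.execute(
        "SELECT COUNT(*), COALESCE(SUM(amount), 0) FROM orders WHERE region = ?",
        (region,),
    ).fetchone()
    return {"region": region, "orders": orders, "revenue": revenue}


conn = open_source_db()
print(query_sales(conn, "APAC"))
# A new row is visible on the very next call; there is no batch load to wait for.
conn.execute("INSERT INTO orders VALUES ('APAC', 50.0)")
print(query_sales(conn, "APAC"))
```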

This collapse doesn’t just simplify architecture; it reshapes business economics. In a traditional environment, by some industry estimates, 60–70% of data budgets go to infrastructure maintenance rather than analysis. MCP inverts that equation. By embedding reasoning directly within data access, companies can derive insights in minutes, not days. It’s an architectural philosophy that prioritizes cognition over construction, one in which the AI learns what to retrieve, why, and how to contextualize it before the data even leaves its origin. This is how organizations move from data-driven to intelligence-native.

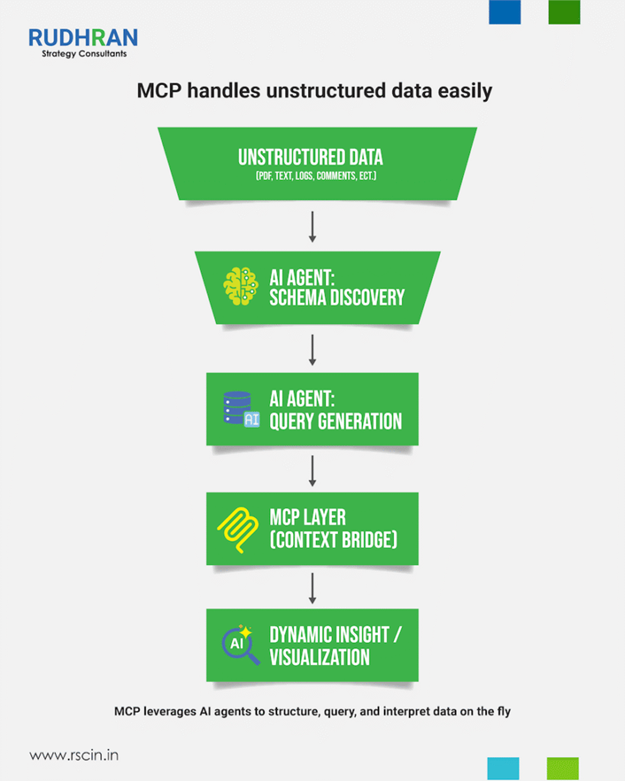

5. Structured vs. Unstructured Data: Breaking the Barrier

A common misconception is that MCP requires structured data. In reality, MCP servers can expose unstructured content such as text, PDFs, or logs as resources, and AI agents working through MCP can infer a schema from that content, converting it into structured, queryable formats on the fly.

Example: SME Feedback Analysis

- Feedback forms (unstructured text) are fed to an AI “Schema Generator Agent.”

- The agent detects entities such as feature, sentiment, and category.

- It creates a temporary structured schema.

- A query agent queries this structured layer dynamically through MCP.

- The AI summarizes insights: “70% of SMEs mention candidate communication as the top improvement area.”

This dynamic structuring eliminates the need for manual preprocessing — merging unstructured and structured data into one continuum.
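The schema-generation flow in the example can be sketched in Python. To keep it runnable without a model, simple keyword rules stand in for the AI “Schema Generator Agent”; the rule lists, feature names, and sample feedback are all hypothetical, so the resulting percentage reflects only this toy data. Each free-text entry becomes a structured row (the temporary schema), and the “top improvement area” question is then answered over those rows.

```python
from collections import Counter

# Hypothetical keyword rules standing in for an AI Schema Generator Agent.
# A real agent would use a language model to detect these entities.
FEATURE_KEYWORDS = {
    "candidate communication": ("candidate", "communicat", "reply", "response"),
    "pricing": ("price", "pricing", "cost", "expensive"),
    "onboarding": ("onboard", "setup", "tutorial"),
}
NEGATIVE_WORDS = ("slow", "hard", "confusing", "expensive", "never", "late")


def infer_record(text: str) -> dict:
    """Turn one free-text feedback entry into a structured row."""
    lowered = text.lower()
    features = [name for name, keys in FEATURE_KEYWORDS.items()
                if any(k in lowered for k in keys)]
    negative = any(w in lowered for w in NEGATIVE_WORDS)
    return {"features": features,
            "sentiment": "negative" if negative else "neutral/positive",
            "text": text}


def top_improvement_area(feedback: list[str]) -> tuple[str, float]:
    """Return the most-mentioned feature and the percentage of entries citing it."""
    rows = [infer_record(t) for t in feedback]  # the temporary structured layer
    counts = Counter(f for row in rows for f in row["features"])
    if not counts:
        return "", 0.0
    feature, n = counts.most_common(1)[0]
    return feature, 100 * n / len(rows)


feedback = [
    "Candidates never get a reply from us in time",
    "The pricing is too expensive for a small team",
    "Communication with applicants is slow",
    "Setup was easy and quick",
    "Hard to track candidate responses",
]
feature, share = top_improvement_area(feedback)
print(f"{share:.0f}% of entries mention {feature} as an improvement area.")
```

Swapping the keyword rules for a model call changes how rows are produced, not how they are queried, which is the separation the example above relies on.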

By a widely cited industry estimate, over 80% of enterprise data is unstructured: emails, documents, chat logs, and feedback forms. Traditional analytics tools largely ignore it because it doesn’t fit into a neat schema. MCP, paired with multi-agent AI systems, allows enterprises to unlock this dormant knowledge capital. For instance, customer support transcripts can be transformed into trend analyses, and compliance documents into real-time audit alerts. In practical terms, far less data goes to waste, because content becomes interpretable when intelligence sits at the point of access.

6. Comparative Impact Analysis: Traditional vs. MCP-Driven Ecosystem

Below is a consolidated overview of how MCP redefines each layer of the traditional data lifecycle:

| Layer | Pre-MCP World | MCP World | Human Role | Survivors / Adapters |
|---|---|---|---|---|
| Data Integration | ETL tools, pipelines, consultants | Direct model-to-data access | Governance, compliance | Security & compliance vendors |
| Data Storage | Snowflake, BigQuery | Optional AI-native data hubs | Access control, lineage | Snowflake (AI-native version) |
| Transformation | dbt, Fivetran | Dynamic AI transformations | Validation | AI transformation governance |
| Analytics | Tableau, Power BI | Conversational AI analytics | Visualization for board reviews | Copilot-integrated BI tools |
| Consulting | Data consulting firms | MCP onboarding, AI governance | Strategic oversight | AI data orchestration experts |

This table illustrates a fundamental inversion of the data economy. The traditional data stack created value through layers; MCP creates value through context. Instead of building infrastructure to move data between systems, companies will compete on how intelligently their AI understands the relationships between data points. The result is a marketplace where tools that manage movement decline, while those that govern meaning rise. This also redefines talent demand — data engineers evolve into AI governance specialists, and consultants shift from dashboard building to model supervision and bias mitigation. The winners in this landscape will be those who evolve fastest from managing data to orchestrating intelligence.

7. The Impact on Key Industry Players

| Company | Core Function | Disruption Level | Recommended Pivot |
|---|---|---|---|
| Tableau / Power BI | Visualization | High | Integrate conversational AI copilots |
| Snowflake | Data warehousing | Moderate | Evolve into AI-native data hub (MCP endpoints) |
| dbt / Fivetran | ETL / transformation | Moderate | Build auto-transformation AI layers |
| Kipi.bi | Consulting & integration | High | Transition to MCP onboarding & AI data governance |
| Other Data Firms | Custom BI & dashboards | Very High | Pivot to AI orchestration and compliance |

Every data player today faces an existential crossroads. Visualization leaders like Tableau and Power BI were built for a world where data was looked at, not spoken to. Their survival depends on how fast they integrate conversational intelligence and MCP-based connectors. Snowflake’s position is more secure: it can remain the trusted “vault” if it transitions to an AI-native hub that exposes secure endpoints for MCP queries.

ETL players like dbt and Fivetran will need to transform their core purpose: from designing transformations to monitoring AI-generated transformations. But consulting firms like Kipi.bi face the toughest challenge — their business model was built on manual customization and integration. MCP standardization eliminates that friction. Their best chance lies in pivoting toward enterprise MCP deployment, compliance consulting, and AI orchestration. In this shift, standardization replaces specialization as the new source of value.

8. From ETL to EAI: The New Workflow

The classical Extract-Transform-Load (ETL) model is giving way to Extract-Analyze-Interpret (EAI) — where insight generation is instantaneous.

| Step | ETL (Traditional) | EAI (MCP + AI) |
|---|---|---|
| Extract | Pull from static sources | Pull via live API endpoints |
| Transform | Manual SQL/dbt transformations | AI auto-schema and normalization |
| Load | Into data warehouse | Dynamic in-memory context for AI reasoning |
| Analyze | Dashboard-driven | Conversational AI interface |
| Interpret | Human report writing | AI-generated narrative + visualization |

The ETL process made sense when data environments were stable and predictable. But in a digital-first economy, information changes every second — ETL cannot keep pace with that velocity. The shift to EAI represents a move from data logistics to data intelligence. Imagine a marketing head asking, “How did campaign performance correlate with sales calls in the last 48 hours?” In the ETL model, this request would trigger a pipeline update, a new query, and a dashboard refresh — taking days. In the EAI model, the MCP layer queries connected systems instantly, the AI interprets correlations, and the result appears conversationally — with contextual explanations. It’s the difference between waiting for information and conversing with it.

9. Multi-Agent Collaboration in the MCP Ecosystem

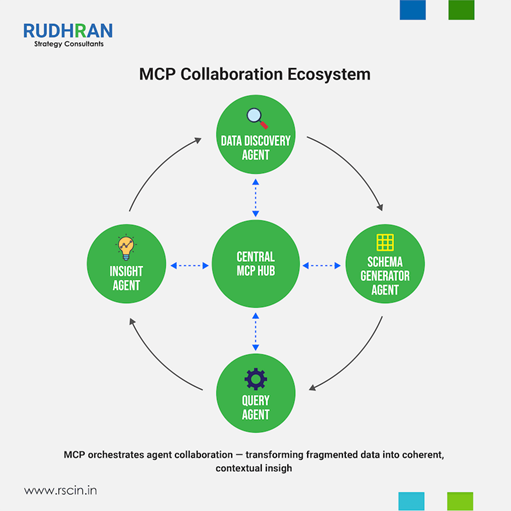

Roles of Agents:

- Data Discovery Agent: Locates and retrieves data (structured/unstructured).

- Schema Generator Agent: Infers structure and relationships.

- Query Agent: Executes dynamic queries using the inferred schema.

- Insight Agent: Synthesizes patterns and generates business narratives.

These agents replace the static “data pipeline” with a living, adaptive intelligence pipeline.

Multi-agent collaboration is the real engine of an MCP ecosystem. Each agent specializes in one function, but their coordination enables emergent intelligence far beyond pre-programmed logic. For instance, the Schema Generator Agent might detect that two datasets share similar entities, prompting the Query Agent to cross-correlate them automatically. Over time, the Insight Agent can learn which insights matter most to business users and adjust its focus. This is how MCP architectures can evolve into self-improving ecosystems: dynamic, context-aware, and minimally supervised. In the long term, we will see “autonomous analytics cycles,” in which data continuously reorganizes itself to reflect business priorities without manual modeling.
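A deliberately simple Python sketch of the four roles follows. Each agent is a plain function that reads from and writes to a shared context, and the Schema Generator Agent’s discovery of a shared entity is what prompts the Query Agent to join the datasets. Everything here is hypothetical: the datasets, the field names, and the fixed sequential orchestration. It shows the hand-offs only; it does not learn, and a real system would back each role with a model calling MCP tools.

```python
from collections import defaultdict

Context = dict


def discovery_agent(ctx: Context) -> None:
    """Locate datasets (here, two small hypothetical in-memory tables)."""
    ctx["datasets"] = {
        "campaigns": [{"region": "North", "spend": 500}, {"region": "South", "spend": 300}],
        "sales": [{"region": "North", "deals": 12}, {"region": "South", "deals": 5}],
    }


def schema_generator_agent(ctx: Context) -> None:
    """Infer each dataset's fields and record the entities they all share."""
    field_sets = [set(rows[0]) for rows in ctx["datasets"].values() if rows]
    ctx["shared_keys"] = set.intersection(*field_sets) if field_sets else set()


def query_agent(ctx: Context) -> None:
    """Cross-correlate datasets by joining rows on a shared key, if any."""
    ctx["joined"] = []
    if not ctx["shared_keys"]:
        return
    key = sorted(ctx["shared_keys"])[0]
    merged: defaultdict = defaultdict(dict)
    for rows in ctx["datasets"].values():
        for row in rows:
            merged[row[key]].update(row)
    ctx["joined"] = list(merged.values())


def insight_agent(ctx: Context) -> None:
    """Turn the joined rows into a one-line business narrative."""
    rows = [r for r in ctx["joined"] if r.get("spend") and "deals" in r]
    if not rows:
        ctx["insight"] = "No shared entities found to correlate."
        return
    best = max(rows, key=lambda r: r["deals"] / r["spend"])
    rate = best["deals"] / best["spend"] * 100
    ctx["insight"] = (f"{best['region']} converts spend into deals most efficiently "
                      f"({rate:.1f} deals per 100 units of spend).")


ctx: Context = {}
for agent in (discovery_agent, schema_generator_agent, query_agent, insight_agent):
    agent(ctx)
print(ctx["insight"])
```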

10. Where Human Intervention Still Matters

Despite MCP’s automation, human roles remain critical in:

- Data Privacy & Compliance: Interpreting regulations such as PDPA and GDPR, and meeting audit standards such as SOC 2.

- Validation & Auditing: Certifying AI-generated insights for regulatory accuracy.

- Ethical Oversight: Preventing data misuse and bias.

- Contextual Storytelling: Translating AI insight into strategic boardroom language.

MCP decentralizes data access but not accountability. The more AI systems autonomously analyze and interpret data, the more human oversight becomes essential — not to compute, but to govern. Regulatory environments will demand human certification for AI-derived decisions, especially in finance, healthcare, and defense. The new role of human professionals will be that of AI stewards: curating ethical frameworks, validating data lineage, and translating algorithmic reasoning into language that aligns with business or societal objectives. Far from replacing humans, MCP amplifies their importance — shifting their focus from data collection to data conscience.
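One way to encode “decentralized access, retained accountability” is a release gate that holds AI-derived insights in regulated domains until a named human signs off. The Python sketch below is hypothetical: the domain list mirrors the sectors mentioned above, and the `Insight` class and reviewer id are illustrative, not part of MCP.

```python
from dataclasses import dataclass, field

# Sectors where, per the argument above, human certification is expected.
REGULATED_DOMAINS = {"finance", "healthcare", "defense"}


@dataclass
class Insight:
    """An AI-generated finding awaiting (or not needing) human sign-off."""
    text: str
    domain: str
    approved_by: list[str] = field(default_factory=list)

    def requires_sign_off(self) -> bool:
        return self.domain in REGULATED_DOMAINS

    def sign_off(self, reviewer: str) -> None:
        """Record a human reviewer's certification."""
        self.approved_by.append(reviewer)

    def releasable(self) -> bool:
        """Unregulated insights ship directly; regulated ones need a reviewer."""
        return not self.requires_sign_off() or bool(self.approved_by)


draft = Insight("Segment B qualifies for faster loan approvals.", domain="finance")
print(draft.releasable())   # False: finance requires sign-off
draft.sign_off("compliance-reviewer-01")  # hypothetical reviewer id
print(draft.releasable())   # True
```

Keeping the reviewer list on the insight itself gives auditors a record of who certified what, which is the lineage role the paragraph assigns to human stewards.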

11. Recommendations Snapshot for Industry Players

Storage Platforms (e.g., Snowflake): Pivot into AI-native data hubs with MCP-compliant endpoints.

Analytics Tools (e.g., Tableau, Power BI): Evolve into conversational copilots integrated with AI ecosystems.

Data Integration Tools (e.g., dbt, Fivetran): Offer AI transformation governance instead of static pipelines.

Data Consulting Firms (e.g., Kipi.bi): Transition to AI orchestration and compliance advisory, becoming the bridge between enterprise data and autonomous intelligence.

The transition to MCP is not optional — it’s inevitable. Companies that recognize this early can rebrand themselves as the interface between traditional systems and the intelligence layer. For instance, a firm like Snowflake could partner with AI providers to offer “secure reasoning nodes” instead of warehouses. Visualization platforms might evolve into hybrid copilots that generate dynamic visuals based on natural language commands. Consulting firms can redefine themselves as compliance custodians — ensuring that AI reasoning aligns with enterprise ethics and legal frameworks. The future isn’t about owning the data — it’s about owning the context in which data speaks.

12. Case in Point: An MCP-Ready HR SaaS

For a platform like an HR SaaS, MCP could unify operational and investor analytics:

- AI agents pull real-time token sales, hiring trends, and SME adoption data.

- An MCP server wraps the platform’s backend APIs so agents can query them directly.

- Results appear conversationally: “Token purchases in Vietnam rose 32% QoQ, led by HCMC at 41% growth.”

This replaces manual dashboards with living, interactive insights — accessible to any stakeholder instantly.
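Behind a sentence like the quoted example is ordinary arithmetic on values the agents retrieve. The Python sketch below computes quarter-over-quarter (QoQ) growth; the purchase counts are hypothetical, chosen only so that the output reproduces the example sentence, and the function name is illustrative.

```python
def qoq_growth(previous: float, current: float) -> float:
    """Quarter-over-quarter growth, in percent."""
    if previous == 0:
        raise ValueError("QoQ growth is undefined when the previous quarter is zero")
    return (current - previous) / previous * 100


# Hypothetical token-purchase counts: (previous quarter, current quarter).
purchases = {"Vietnam": (1000, 1320), "HCMC": (400, 564)}

country = qoq_growth(*purchases["Vietnam"])
city = qoq_growth(*purchases["HCMC"])
print(f"Token purchases in Vietnam rose {country:.0f}% QoQ, "
      f"led by HCMC at {city:.0f}% growth.")
```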

The HR SaaS architecture is naturally aligned with MCP’s design philosophy. Because its data model already emphasizes context — matching skills, subject expertise, and language to hiring needs — MCP can operate as a semantic bridge between business users and platform intelligence.

For example, an investor could ask, “Which SME segments show the fastest adoption rate post-token activation?” and the MCP-enabled AI would cross-reference real-time adoption data, hiring trends, and user retention without human mediation. This is not far-fetched; it is the foundation for next-generation HR SaaS analytics, systems that understand themselves and explain their performance dynamically. For platforms like these, adopting MCP early offers a defensible technological advantage and strengthens investor credibility by showcasing real-time transparency and AI-native scalability.
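The investor’s question becomes a ranking once “adoption rate” is defined. The Python sketch below assumes a hypothetical definition (the share of token-activated accounts that became active users within 30 days) and hypothetical per-segment counts of the kind an MCP server might return from the platform’s backend.

```python
# Hypothetical per-segment counts returned by the platform's backend.
segments = [
    {"segment": "Retail SMEs", "activated": 200, "adopted_30d": 120},
    {"segment": "Logistics SMEs", "activated": 150, "adopted_30d": 105},
    {"segment": "Tech SMEs", "activated": 80, "adopted_30d": 40},
]


def adoption_rate(row: dict) -> float:
    """Share of token-activated accounts that adopted within 30 days (assumed definition)."""
    return row["adopted_30d"] / row["activated"] if row["activated"] else 0.0


ranked = sorted(segments, key=adoption_rate, reverse=True)
for row in ranked:
    print(f"{row['segment']}: {adoption_rate(row):.0%}")
```

Making the definition explicit in one function is a design choice worth keeping: if “adoption” is later redefined, every conversational answer built on it changes consistently.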

13. The Broader Business Implications

MCP democratizes analytics. No dashboards, no SQL, no analysts required — only secure endpoints and a conversational interface. This means:

- Lower operational costs

- Faster decision cycles

- Real-time market adaptability

Enterprises will move from data ownership to data fluency — where AI understands context as humans do. This shift carries profound implications for business structure and leadership. In the pre-MCP world, data interpretation was centralized in IT and analytics teams. Post-MCP, decision authority decentralizes — every department, from finance to marketing to HR, can access the same depth of insight through natural interaction.

This eliminates the bottleneck of “data intermediaries” and enables true operational agility. Moreover, the economic impact is non-trivial: by removing redundant data infrastructure, enterprises could potentially reduce analytics-related costs by as much as 40% while accelerating strategic turnaround times. In essence, MCP transforms data from a departmental function into an organizational language. The companies that master this fluency first will redefine efficiency benchmarks across industries.

14. The Road Ahead: Toward Autonomous Data Understanding

We are entering an era where data no longer waits to be analyzed — it explains itself.

This mirrors the evolution of cloud computing: slow adoption at first, then rapid, irreversible transformation.

In a few years, “open dashboard” will be replaced by “ask AI.”

And MCP will be the universal layer making that conversation possible.

The adoption curve of MCP will likely follow the same trajectory as APIs in the early 2000s and cloud computing in the 2010s — niche at first, then foundational. Early adopters will be AI-first startups and high-tech enterprises seeking data agility. The second wave will come from regulated industries — finance, logistics, healthcare — where compliance requires contextual understanding.

The final wave will be governments and public infrastructure systems, where data must flow seamlessly across jurisdictions. Within the next five years, MCP could become a baseline expectation in any enterprise architecture, just as REST APIs or SSO authentication are today. Ultimately, MCP will not be seen as a feature — it will be as invisible and indispensable as the network protocols that power the internet.

15. Conclusion

The Model Context Protocol (MCP) is not another data tool — it is the new grammar of digital intelligence. It dissolves the silos of integration, storage, and analytics by enabling direct, intelligent communication between AI and data — structured or not. For traditional firms, the choice is clear: Evolve into the age of AI-native data orchestration, or risk being left in its wake.

The future belongs to those who understand that the next great revolution isn’t about where data lives, but about how intelligence speaks to it. RSC’s foresight into MCP is not simply about predicting a technological trend — it’s about understanding the economic reordering that will follow. As intelligence becomes a service layer, the strategic advantage will belong to organizations that can harmonize governance, technology, and context.

Those who cling to layered architectures will be outpaced by those who enable cognitive infrastructure. This is the inflection point where strategy meets foresight — and where companies must reimagine themselves as living systems of information, not static systems of record. MCP is not the end of data engineering; it’s the beginning of intelligence engineering.