The due-diligence checklist to determine if a production-grade LLM is feasible for your organization, thereby cementing your moat status in the industry

Production-grade LLM systems can be defined as foundation models + organization-specific retrieval (RAG) + enterprise governance & LLMOps — and they are no longer fictional curiosities to empower business. They are becoming core infrastructure for decision-making, regulatory mapping, and competitive differentiation in complex, knowledge-heavy industries. While this is applicable to knowledge-heavy subsets across all industries, this article focuses towards highly regulated sectors such as pharma and biomedical sectors. In this space, the payoff is twofold: faster, evidence-based operational decisions and demonstrably auditable linkages between business processes and regulatory guidance.

Key contextual facts in the past 1-2 years to consider as you read:

- The FDA and other regulators have moved quickly to publish guidance for AI/ML in regulated products and decision-making, stressing lifecycle management, transparency and context-of-use

- Consulting firms and large enterprises are already building internal, production LLMs and copilots – such as McKinsey’s Lilli – proving that knowledge-dense organizations can operationalize internal LLMs at scale

- Retrieval-augmented generation (RAG) is the practical glue: it lets an LLM pull exact, versioned passages from regulatory guidance, SOPs, clinical study reports, or research — avoiding hallucination and creating an audit trail. Early evaluations show RAG’s value for guideline retrieval and mapping tasks across medical documents

This article explains what production-grade LLMs mean in practice, why they are a strategic moat if engineered correctly, and as an example, how pharma and biomedical firms should think about implementation (including governance and validation), and why consultancies and market-research houses should treat in-house LLMs as long-term intellectual capital. In the continued series of RSC blogs, similar articles will be published around production-grade LLM’s potential across other industries.

1. What is a production-grade LLM system — the concrete, three-layer definition

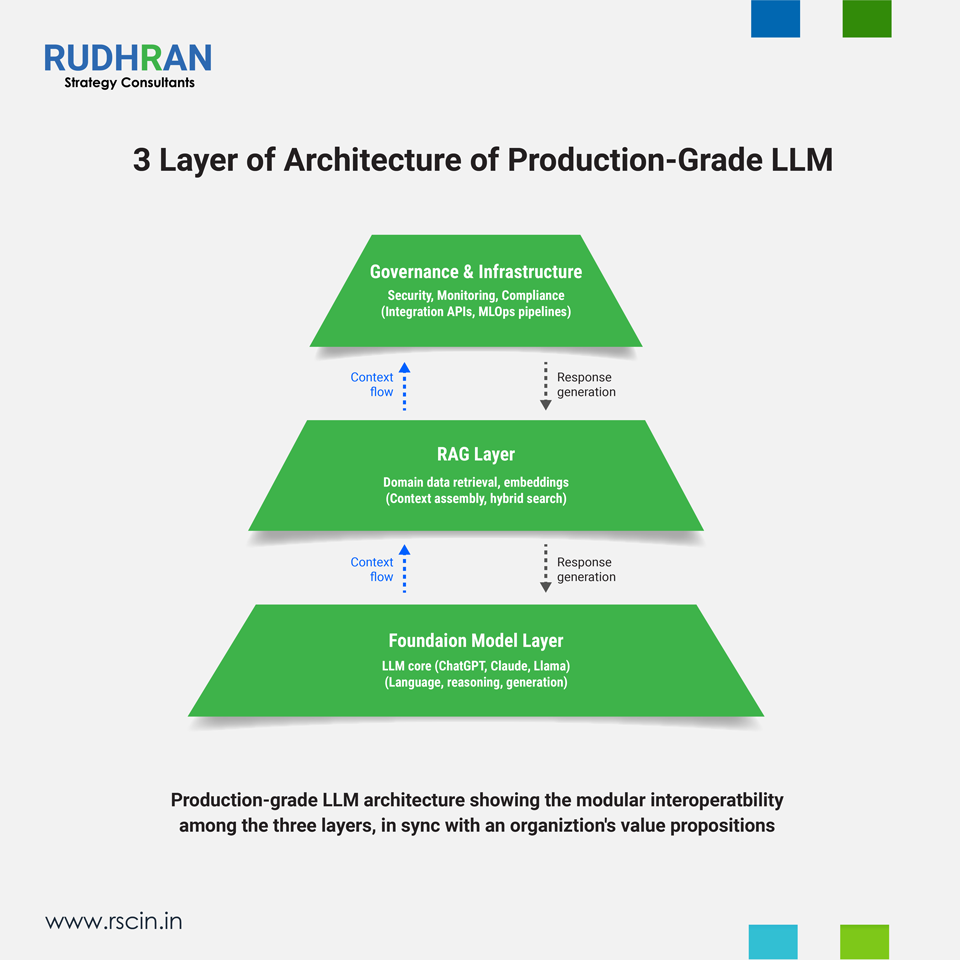

In simple terms, a production-grade LLM system is a reliable, integrated, auditable AI platform that combines three necessary layers as shown below:

- Generative Foundation Model (Intelligence Layer) — the underlying LLM (GPT, Claude, Llama, or a proprietary model) that performs synthesis, summarization, and reasoning

- Organization-Specific RAG (Context Layer) — a retrieval layer that guarantees answers, based on authority and versioned internal sources. These include SOPs, clinical study reports, regulatory guidance, product specs, etc.

- Enterprise Infrastructure & Governance (Trust Layer) — role-based access control, encryption, audit logs, retriever index versioning, human-in-the-loop QA, and LLMOps tooling for monitoring, rollback, and retraining

Put succinctly: Intelligence (LLM) + Context (RAG) + Trust (Governance & LLMOps) = Production-Grade LLM System.

This is not a marketing phrase — but rather an operational checklist that will cement an enterprise operational efficiency and growth. A pure LLM without retrieval or governance is a insignificant in a regulated firm. A retrieval system without a high-quality generative layer produces brittle answers. Only the triad combination delivers both scale and defensibility, surpassing complications created by attrition at middle management and decision-making challenges in top management.

2. The four enterprise capabilities every CEO should benchmark

| Capability | Definition | Typical Enterprise Examples |

| Informational | Retrieves and summarizes knowledge from documents and systems | SOP lookup, policy Q&A, research summaries |

| Navigational | Directs users to the correct system, file, ticket, or dataset | “Where is the latest protocol?”, system routing |

| Transactional | Executes or triggers business actions with controls | Approvals, workflow initiation, record creation |

| Analytical | Synthesizes insights across structured + unstructured data | Trend analysis, root-cause analysis, forecasting |

Any production deployment should be evaluated across four core capabilities — they are what we used in the RSC matrix you reviewed:

- Informational — accurate retrieval and summarization of knowledge (Example, “Which SOP covers batch release for drug X?”)

- Navigational — ability to point users to the right system, folder, ticket, or data source (Example, “Show me the latest stability report for lot Y in the QA portal”)

- Transactional — ability to safely and auditable execute actions (Example, open a change request, flag a batch for recall, kick off a QC test)

- Analytical — ability to synthesize insights from structured + unstructured data (Example, root cause analysis across manufacturing logs + incident reports)

| Platform / System | Informational | Navigational | Transactional | Analytical |

| Microsoft 365 Copilot | Yes | Yes | Yes | Yes |

| SAP Joule | Yes | Yes | Yes | Yes |

| Google Duet AI | Yes | Yes | Yes | Yes |

| ServiceNow AI Search | Yes | Yes | Yes | Yes |

| IBM watsonx Assistant | Yes | Yes | Yes | Yes |

| Salesforce Einstein GPT | Yes | Yes | Yes | Yes |

| Slack GPT | Yes | Yes | No | No |

| Atlassian AI Assistant | Yes | Yes | Partial | Partial |

| Notion AI | Yes | Yes | No | No |

| JPMorgan Internal Copilot | Yes | Yes | Yes | Yes |

| Meta / Google Internal Copilots | Yes | Yes | Partial | Yes |

A production-grade LLM pays dividends only if it supports the right mix of these capabilities for your business. For pharma, Informational and Navigational with absolute traceability are table stakes. Transactional functions must be gated and auditable. Analytical functions must come with statistical provenance followed by human review.

3. Why pharma and biomedical firms are uniquely positioned to benefit

3.1 The opportunity: faster regulatory mapping, smarter trials, and continuous knowledge reuse

Over the last 1–3 years the pharmaceutical industry has faced three realities:

- The regulatory environment for AI/ML and software-enabled devices has tightened and clarified (FDA lifecycle guidance, submission recommendations) that firms to show rigorous lifecycle, validation and transparency for AI elements

- Drug discovery, clinical trial design, and medical affairs workflows now generate massive volumes of unstructured documents (protocols, CSR text, investigator brochures, post-market safety narratives) — ripe for semantic indexing and retrieval

- The commercial and quality cycles are time-sensitive: mapping a new guidance to SOPs manually is slow and error-prone, risking noncompliance or delayed launches

| Regulatory Trigger | RAG Input Sources | Internal SOP Impacted | Output Generated | Human Validation Required |

| New FDA draft guidance | FDA guidance documents | Quality SOPs | SOP gap analysis report | Yes |

| Revised AI/ML guidance | FDA + ICH documents | Clinical SOPs | Change recommendation draft | Yes |

| Inspection readiness | FDA + internal audit logs | Compliance SOPs | Audit response mapping | Yes |

| Post-market surveillance update | FDA safety guidance | PV SOPs | Reporting workflow updates | Yes |

Production-grade LLMs with RAG solve these precisely: They allow a regulatory specialist to query “Which SOPs need updates to comply with new FDA AI-in-decision guidance?” and get a prioritized, cited list linking the exact guidance paragraph to internal SOP sections — with a human-review workflow attached. This reduces time-to-compliance from weeks to days. One can see RAG evaluations for regulatory document retrieval showing high precision on these tasks

One can expect to have the following concrete pharma use-cases (realistic and near-term):

- Regulatory mapping & gap analysis. RAG retrieves sections from FDA guidance and highlights mismatches against SOPs, generating annotated draft change requests for QA owners

- Clinical operations support. RAG brings together prior trial protocols, CRF templates, and safety narratives to recommend inclusion/exclusion changes or matching historical comparators — accelerating protocol design

- PV (Pharmacovigilance) drafting. LLM drafts event narratives from structured ADR fields; RAG ensures narratives cite correct guideline language and internal reporting timelines

- Manufacturing deviation triage. When a deviation occurs, the system retrieves related batch records, SOP steps, risk assessments and suggests immediate containment steps — with traceable citations

3.2 The Risk: “Hallucinations” are regulatory poison — governance matters

The single greatest wrong turn is to let an LLM produce un-sourced assertions in a regulated context. Regulators want traceability: what source justified your recommendation? RAG addresses this by returning passages and metadata; enterprise governance ensures only approved sources are indexed. Several peer-reviewed works and proofs-of-concept show RAG dramatically reduces unsupported assertions in medical and regulatory queries — but they also stress human review and qualification for each use case.

4. How RAG is used today in pharma to map FDA guidance to SOPs

Below is a reproducible, practical workflow many regulated firms should adopt as a minimum viable compliance deployment.

Step 1 — Authoritative source inventory

Ingest official regulator texts (FDA guidance, ICH docs), internal SOPs (versioned), QMS records, and selected external standards (USP, ISO). Tag with metadata: date, revision, applicability, and owner.

Step 2 — Indexing & access rules

Create a retriever index but limit the index to approved documents and specific folders. Maintain index versioning and snapshot every publication date for audit.

Step 3 — Querying & mapping

The user asks: “Which SOPs are impacted by FDA Draft Guidance X (Jan 2025)?” The RAG pipeline retrieves relevant guidance paragraphs and the SOP sections that share semantic similarity. The RAG then synthesizes a mapping report with citations and flags gaps.

Step 4 — Human validation & change management

Assigned QA/regulatory owners review AI-suggested mappings, accept or modify them, and generate a validated change request in the QMS. The system logs reviewer decisions as part of the audit trail.

Step 5 — Continuous monitoring

Deploy monitors that detect new regulatory releases, alert the retriever team, and trigger re-indexing and re-mapping as needed.

Why this works in practice: The regulatory text rarely changes every day; RAG avoids re-training models because it pulls the current text dynamically. FDA guidance explicitly expects context-of-use documentation and lifecycle management for AI — exactly the artifacts this workflow produces.

Multiple recent evaluations show that RAG architectures outperform vanilla LLM queries for regulatory retrieval tasks and question answering across FDA guidance, dramatically improving precision and source citation rates.

5. Implementation playbook: A minimal viable production-grade LLM for pharma

Below is an executable playbook: a 12–16 week program to stand up a pilot production-grade LLM for regulatory mapping and SOP gap analysis.

| Phase | Duration | Primary Owner | Key Deliverables | Risk If Skipped |

| Executive alignment | 1–2 weeks | CEO / CCO | Use-case charter | Misaligned expectations |

| Data scoping | 2–3 weeks | Regulatory / QA | Approved data corpus | Compliance failure |

| RAG indexing | 2–3 weeks | IT / Data | Searchable knowledge base | Hallucinations |

| Model integration | 3–4 weeks | AI / IT | Grounded responses | Unreliable outputs |

| Validation & audit | 2–3 weeks | QA / Legal | Validation reports | Regulatory rejection |

| Controlled rollout | 2–3 weeks | Business owner | Measured adoption | Trust erosion |

Phase 0 — Executive alignment: CCO or Head of Regulatory should define success metrics that include time to map a guidance to SOPs, percentage of mappings accepted without edits and audit readiness. The executive team should also appoint an AI oversight committee that supervises regulatory, QA, IT and legal information exchange.

Phase 1 — Source & scope: Determine initial scope and volume of quality management systems (QMS) and SOPs on a weekly or monthly basis. Define data security access rules, and retention policy.

Phase 2 — Indexing & retrieval: Prepare ingestion pipelines, normalize text, apply metadata tagging, and build vector indices.

Phase 3 — LLM & prompt engineering: Pick a foundation AI reasoning model that best fits your organization’s high-volume data. Build grounding prompts that request citations and evidence.

Phase 4 — UI & workflow integration: Create a simple UI for regulatory specialists that shows the query, retrieved passages, and confidence scores.

Phase 5 — Validation & auditing: Conduct sample tasks, compare AI outputs vs. manual mappings, measure precision and test audit report generation.

Phase 6 — Controlled rollout: Deploy to a regulated user group, log usage, and iterate.

Minimum deliverables should include:

- An index of approved regulatory & SOP documents

- The RAG pipeline with transparency

- A governance playbook with varied administrative privileges

- An MLOps monitor to watch for concept drift and new data.

6. Validation, auditability, and regulator expectations

Regulators insist that systems influencing regulatory decisions or safety should have defined contexts of use and documented validation frameworks. The FDA’s recent documents emphasize lifecycle management and submission recommendations for AI-enabled device software functions — all of which demand that firms can show provenance and change control for AI outputs.

RSC recommends the following validation approach:

- Articulating a traceability matrix, as a standard linking each AI decision, to the source document followed by reviewer sign-off

- Establishing test cases for retriever to ensure it returns the canonical source for appropriate queries, based in priority

- Enforcing human supervision to verify KPI’s are correctly identified. If not, then it should be manually rewritten

- Publishing versioned indices and data lineage to substantiate authenticity for every RAG based output

- Executing penetration tests and privacy certifications if the index contains personal and sensitive data

The above recommendations turn the AI system from a ‘wild’ generator into an auditable engineering artifact.

7. Beyond pharma: why market-research and management consulting firms should build in-house production LLMs

Consultancies and research houses live on institutional memory — decades of slide decks, business insights, whitepapers, client deliverables, and proprietary benchmarks. A properly built in-house production-grade LLM transforms that memory into continually productive capital. An in-house LLM can enable consulting / market research firms with:

- Instant precedent retrieval: For example, you can query the firm’s knowledge repository with the following prompt: “Show past engagements on direct-to-physician programs in APAC, including pricing model and outcome metrics.” The system returns exact slides, notes, and client summaries, with citations

- Hypothesis generation using historical context: Using prior engagements across industries, the LLM suggests playbooks adapted to the client’s context, noting which recommendations historically correlated with efficient ROI

- Proposal drafting & risk checks: Draft proposals from prior winning ones and auto-flag regulatory or geopolitical concerns based on the region and sector

- Quality control & IP compliance: Ensure no personally identifiable information (PII) or restricted content is suggested by configuring retrieval filters and human supervision mandates.

| Consulting Activity | Traditional Approach | LLM + RAG Enhancement | Strategic Advantage |

| Market analysis | Manual research | Instant precedent retrieval | Faster insights |

| Strategy design | Partner-led synthesis | Pattern recognition across decades | Consistency |

| Proposal creation | Reuse past decks | Auto-drafted, validated proposals | Win-rate uplift |

| Due diligence | Analyst-heavy | AI-supported synthesis | Speed + depth |

| Knowledge retention | Tribal knowledge | Institutional memory system | Compounding IP |

Why this is an economic moat?

Because the LLM does not replace consultants, but rather amplifies their work credibility. The firm’s decades of insights become query-based, data capital. Over time, the system compounds knowledge, and each closed engagement becomes another retrievable precedent, improving future recommendations. That is true competitive advantage of monumental proportions.

Practical evidence: consultancies already doing this

Several top firms have built internal generative tools. McKinsey’s “Lilli” is an example of an internal generative assistant that draws from decades of knowledge repositories. Large consultancies develop both in-house platforms and selectively partner with technology vendors to scale faster.

8. Common engineering & legal pitfalls that enterprise management should know

Pitfall 1 — Index leakage: Sensitive client materials accidentally become available to other clients or public indices. Strict tenant isolation, document classification, and retrieval filters must be enabled. Provisions must be put in place such that audit logs show who accessed what with date and time stamps.

Pitfall 2 — Unqualified automation of transactional actions: An LLM can trigger workflow without QA signoff. Transactional actions therefore must be gated with human-bench approvals, digital signatures, and explicit audit records.

Pitfall 3 — Validation bias or incompetence: It is a risk to run limited tests and claim production readiness. Firms must ensure realistic scenarios, measure human validation workload, conduct blind comparisons with human experts, and document acceptance criteria. Such protocols also cement firms against robust regulatory audits.

Pitfall 4 — Underinvesting in retriever design: Poor indexing yields irrelevant results, causing user distrust. Firms must invest in hybrid retrievers and tune embeddings to biomedical/pharma knowledge repositors as applicable.

Pitfall 5 — A non-robust LLMOps: This is probably the most important pitfall. Models can degrade or drift unnoticed. Architects must craft instrument alerts on key metrics (precision, rejection rates, confidence distribution) and run scheduled index re-captures.

9. Cost & resourcing model

Costs vary widely by scope, and it is premature to lay out costs accurately given the complexities and challenges each industry represents. A realistic 4-6 quarter plan includes spending across three buckets:

- Data & Indexing (20–30%) — document ingestion, metadata work, controlled storage

- Model & Platform (35–50%) — model access, inference costs, compute for private deployments, redundancy

- Governance & Ops (20–30%) — LLMOps, audit tooling, compliance engineering, human validation workforce

For pharma firms, additional line items include validator staff, audit readiness, and possible on-prem hosting for sensitive datasets. While initial implementation is expensive, the recurring value – time saved on regulatory mapping, faster protocol iteration, reduced inspection risk – can optimistically return investment within 3 years. It is imperative for firms – whether pharma, biomedical or consulting services – to execute a cost-benefit analysis based on their R&D budgets and client’s high-frequency service pipelines.

10. Measuring success of your production-grade LLM: KPIs that matter in Pharma / Biomedical space

- Time to regulatory mapping (baseline vs. AI assisted) — days saved

- Percentage of AI-suggested mappings accepted without change — precision in context

- Mean time to generate compliant protocol drafts — for clinical operations

- Number of auditor queries resolved within SLA using AI reports — inspection performance

- Utilization and trust scores among domain users — adoption metrics

- Portal of provenance — how many outputs include direct citations and retriever source IDs

11. A short case vignette

The Case: A mid-sized biologics firm receives an FDA draft guidance on post-market surveillance for AI-assisted diagnostic elements. They must update 18 SOPs across pharmacovigilance (PV), QMS, and labeling before a planned product launch.

Manual approach: Cross-functional teams take 6–8 weeks to map guidance paragraphs to SOPs and produce redlined drafts.

Production-grade LLM approach using RAG + governance:

- The firm’s compliance lead queries the guidance in a single UI

- The system returns all SOP sections with similarity scores and highlights which SOPs lack required reporting windows

- The QA owner receives pre-populated change request drafts with exact guidance citations, reviews and approves

- Net time to produce validated change requests: 6 business days

- Outcome is faster launch timeline and a detailed audit trail for regulators

12. 3 pilot projects RSC recommends for pharma / biomedical firms

- Regulatory mapping & SOP gap detection — high impact, clear auditability. A 6–12 week pilot

- Clinical protocol drafting assistant — combines trial templates, historical protocols and safety narratives. An 8–14 week pilot with robust human validation

- Manufacturing deviation triage — integrate batch records, shift logs, and SOPs for rapid containment guidance. A 10–16 week pilot with transactional gating

Each pilot should be scoped to a single business unit and produce measurable KPIs.

13. The strategic question: build vs. partner vs. hybrid – what should you opt for?

Building an in-house production-grade LLM: This establishes the highest long-term control and proprietary value, but requires substantial data engineering. LLMOps and governance investment will be heavy and one expected an ROI in less than 3 years. It is ideal for large firms and consultancies with decades of IP and knowledge repositories

Partner (SaaS / vendor): This pathway ensures the fastest time to value, lower initial capital outlay. However, there is the possibility of vendor lock-in and less control over index/data portability. RSC recommends firms to choose only those vendors that support strict data residency and audit logs.

Hybrid: Your firm can host your index and governance controls in-house while using third-party foundation models under strict contracts since it balances speed and control. RSC often recommends a hybrid approach as an initial step: index in-house, use vetted LLM providers with strict SLAs, and migrate to an on-prem model only when usage and value justify the switch.

14. Why boards should care — the Moat Argument

Your competitors will eventually adopt similar tools. But the firm that compounds its institutional knowledge through a production-grade LLM is not merely automating tasks bur rather converting time, decisions and precedents into a persistent, query-enabled asset.

In simple terms, data becomes capital when it’s retrievable, contextualized, and used to make decisions at speed. Each validated output becomes another retrievable precedent — compounding the firm’s cognitive capital. Such a compounding effect creates asymmetric advantage in the guise of faster launches, fewer inspection surprises, and better client recommendations (for consultancies), and a defensible operational moat.

15. Final checklist for CEOs — is your organization ready?

For starters, the CEO or executive management can use this due diligence list:

| Dimension | Key Question | Status-To be filled by owner | Owner | Risk Level |

| Strategy | Clear business use case defined? | CEO | High | |

| Data | Clean, versioned data available? | CIO | High | |

| Governance | AI oversight defined? | Legal / QA | High | |

| Security | Access & isolation enforced? | CISO | High | |

| Validation | Human-in-loop process defined? | QA | High | |

| Infrastructure | Scalable AI stack ready? | IT | Medium | |

| Change readiness | Teams trained & aligned? | HR | Medium | |

| ROI clarity | Metrics & payback defined? | Finance | Medium |

If you check 8 of 10 boxes, you have the foundation for a production-grade LLM program worth investing. You can also avail RSC’s AI strategy services to execute a RAG audit in 1-2 months’ time – depending on the size of your knowledge repository, workforce readiness, and service value propositions – to confirm whether or not a production-grade LLM is imminent to your organization. Our experience in the past two years have led to the conclusion that enterprises don’t fail at AI because the technology doesn’t work. They fail because execution is misaligned with reality.

16. Conclusion — immediate next steps

- Select one high-value pilot (regulatory mapping or clinical protocol drafting)

- Form the cross-functional team (Regulatory/QA, Clinical Ops, IT, Legal)

- Define success metrics and governance and sign a 12–16 week pilot charter

- Technical kickoff: ingest 100% of the scoped documents, build your retriever, and require that every output contains at least one retriever citation

- Report results to the board and adjust scale plan (build vs partner)

If your organization is a consultancy or market-research firm, treat the LLM as a product — it should have a product owner, roadmap, and monetization/usage metrics. For pharma / biomedical firms, treat it as regulated software with explicit validation and lifecycle controls.